Using object tracking to combat flickering detections in videos

How to reduce the number of flickering detections in videos with object tracking.

18 April 2024

Introduction

Contemporary machine learning models display impressive accuracy, often rivaling human perception. Yet achieving such accuracy usually involves trade-offs: the more accurate the model, the longer its inference time and the greater its demand on video memory. These factors pose significant challenges, particularly when deploying solutions in the cloud. Some models require up to 24 GB of video memory, pushing the limits of even high-end GPUs like the RTX 4090, priced at 1,800 euros. Running software on such costly hardware inevitably drives up operational expenses. Compounding the issue, larger models also have lower throughput, so more instances must run in parallel to process the same amount of video. Consequently, deploying large models becomes financially prohibitive.

This trend prompts developers to turn to fast, small models. At Celantur, we aim to use models that need no more than 5 GB of video memory. However, our clients entrust us with the meticulous anonymization of personal information, which demands exceptionally high recall. In other words, they expect a minimal number of false negatives (undetected objects). Naturally, small models alone cannot deliver exceptionally high recall, and this creates a serious problem.

The problem

Consider a scenario where we have a model that successfully detects an object 999 times out of 1000. Let's apply this model to anonymize dashcam footage recorded at 60 frames per second (fps) from a car trailing another vehicle. On average, roughly every 17 seconds of video we will miss the license plate of the leading vehicle. However well the other frames are anonymized, that single missed frame exposes the plate and renders the work done on the preceding 999 frames ineffective. With a detection rate of 99.99%, the same situation arises only about every 3 minutes.
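These figures come from a back-of-the-envelope calculation. The sketch below assumes that each frame is missed independently and that misses are spread evenly over the video. Real footage does not guarantee this, since misses tend to cluster on hard frames. The function name is illustrative.

```python
def mean_seconds_between_misses(detection_rate: float, fps: float) -> float:
    """Expected stretch of video between two missed frames.

    Assumes each frame is missed independently with probability
    (1 - detection_rate), i.e. one miss every 1 / (1 - detection_rate) frames.
    """
    frames_between_misses = 1.0 / (1.0 - detection_rate)
    return frames_between_misses / fps


for rate in (0.999, 0.9999):
    seconds = mean_seconds_between_misses(rate, fps=60)
    print(f"detection rate {rate:.2%}: one miss every {seconds:.1f} s ({seconds / 60:.1f} min)")
```

At 60 fps this gives about 16.7 s for a 99.9% detection rate and about 166.7 s (2.8 min) for 99.99%.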

In essence, relying solely on machine learning models for anonymization proves insufficient. Even with high precision, the intermittent occurrence of missed detections poses a significant challenge. Without additional algorithms to address these critical yet brief lapses in continuous object detection, achieving robust anonymization remains elusive, unless clients are willing to invest substantial sums for each video processed.

Tracking algorithms

To bridge these gaps, we'll employ BOTSORT, a multiple object tracking algorithm. Such algorithms assign unique, persistent IDs to detected objects and keep those IDs consistent across consecutive frames of a video. Although the inner workings of these algorithms can be intricate, their workflow can be distilled into several key steps:

- Leveraging information from previous algorithmic steps, the tracker anticipates the locations of previously detected objects in the upcoming frame.

- The algorithm takes the bounding boxes detected by the machine learning model in the new frame as input.

- Utilizing a re-identification routine, the algorithm matches the detected bounding boxes with the anticipated predictions from previous steps.

- Bounding boxes that were predicted but not successfully re-identified are marked as "lost." The tracking algorithm retains these lost detections for several subsequent frames, aiming to maintain tracking even if the object is temporarily obstructed.

- Bounding boxes that were predicted and successfully re-identified in the new frame have their IDs set consistently with those from previous frames.

- Detected bounding boxes that do not match any prediction initialize new tracks, for which the tracker will produce predictions in subsequent frames.
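To make these steps concrete, here is a deliberately simplified tracker in Python. It is not BOTSORT. It uses a constant-velocity box shift instead of a Kalman filter and greedy IoU matching instead of BOTSORT's assignment and appearance-based re-identification. It also has no camera-motion compensation. All names (`SimpleTracker`, `Track`, `iou`) are illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    velocity: Tuple[float, float] = (0.0, 0.0)  # (dx, dy) in pixels per frame
    frames_lost: int = 0

    def predict(self) -> Box:
        """Constant-velocity guess for the box in the next frame."""
        dx, dy = self.velocity
        x1, y1, x2, y2 = self.box
        return (x1 + dx, y1 + dy, x2 + dx, y2 + dy)


class SimpleTracker:
    def __init__(self, max_lost: int = 30, iou_threshold: float = 0.3):
        self.max_lost = max_lost  # frames a lost track is kept alive
        self.iou_threshold = iou_threshold
        self.tracks: List[Track] = []
        self.next_id = 1

    def update(self, detections: List[Box]) -> None:
        # Predict where every existing track should be in this frame.
        predictions = [t.predict() for t in self.tracks]
        # Match detections to predictions greedily, highest IoU first.
        pairs = sorted(
            ((iou(p, d), ti, di)
             for ti, p in enumerate(predictions)
             for di, d in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets = set(), set()
        for score, ti, di in pairs:
            if score < self.iou_threshold:
                break
            if ti in matched_tracks or di in matched_dets:
                continue
            matched_tracks.add(ti)
            matched_dets.add(di)
            track, new_box = self.tracks[ti], detections[di]
            # Re-identified: keep the ID, refresh box and velocity.
            track.velocity = (new_box[0] - track.box[0], new_box[1] - track.box[1])
            track.box, track.frames_lost = new_box, 0
        # Unmatched tracks are "lost": coast on the prediction until max_lost is exceeded.
        kept = []
        for ti, track in enumerate(self.tracks):
            if ti not in matched_tracks:
                track.box = predictions[ti]
                track.frames_lost += 1
            if track.frames_lost <= self.max_lost:
                kept.append(track)
        # Unmatched detections start new tracks.
        for di, d in enumerate(detections):
            if di not in matched_dets:
                kept.append(Track(self.next_id, d))
                self.next_id += 1
        self.tracks = kept

    def lost_tracks(self) -> List[Track]:
        """Tracks currently kept alive without a matching detection."""
        return [t for t in self.tracks if t.frames_lost > 0]
```

Consider a box that moves 2 px to the right between two frames. If the next frame has no detection, track 1 appears in `lost_tracks()` with its box shifted another 2 px. That coasted box can fill the gap left by the missed detection.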

While these steps lead to the consistent assignment of unique IDs to detections, they alone do not fully address the flickering issue. However, the step that retains lost tracks for several additional frames proves crucial here. Extracting these lost tracks presents a significant opportunity: it lets us predict the location of bounding boxes even when the machine learning model fails. This capability dramatically reduces the flickering of bounding boxes and makes the overall anonymization pipeline more robust.

Implementation using the BOTSORT algorithm

To avoid implementing the algorithm ourselves, we used an established open-source implementation from GitHub. Integrating it into our workflow has been relatively seamless, although we encountered a compatibility issue with the detection class used in our Celantur software. As a workaround, we subclassed the tracker class and adapted its update method to accept our detections:

```python
from typing import List

import numpy as np

# Assumes the root of the cloned BoT-SORT repository is on the Python path;
# "BoT-SORT" contains a hyphen and cannot be used in a Python import statement.
from tracker.bot_sort import BoTSORT

from celantur.detection import Detection as CelanturDetection


class BOTSORTWrapper(BoTSORT):

    class mock_results:
        '''
        This class is used to pass the detections into the BoTSORT.update() method.
        From the list of detections, one needs to fill out the necessary fields of this class:
        * Bounding boxes list
        * Confidence score list of corresponding bounding box
        * Class/Type list of corresponding bounding boxes
        '''

        def __init__(self, boxes, scores, cls):
            # reshape keeps an (N, 4) array even when there are no detections
            self.xyxy = np.array([list(b) for b in boxes], dtype=np.float32).reshape(-1, 4)
            self.conf = np.array(scores, dtype=np.float32)
            self.cls = np.array(cls, dtype=np.int32)

    def __init__(self, args, frame_rate=30):
        super().__init__(args, frame_rate)

    def update(self, detections: List[CelanturDetection], img: np.ndarray):
        '''
        This function constructs the mock results object from the list of object detections.
        '''
        boxes = [d.box for d in detections]
        scores = [d.score for d in detections]
        classes = [d.type for d in detections]
        results = BOTSORTWrapper.mock_results(boxes, scores, classes)
        return super().update(results, img)
```

This BOTSORT implementation creates tracks from the detections and keeps lost tracks for up to 30 frames. Both the lost tracks and the currently tracked ones can be accessed directly:

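```python
tracker = BOTSORTWrapper(args)  # args: the tracker configuration

lost_tracks = tracker.lost_stracks  # tracks kept alive without a matching detection
tracked_tracks = tracker.tracked_stracks  # tracks currently being tracked
```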

Any logic can then be used to blur or anonymize the tracks in the lost_tracks list.

In our case, we simply converted them to CelanturDetection objects and passed them to the next step of the anonymisation pipeline.
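Below is a minimal sketch of that conversion step. `SimpleDetection` is a hypothetical stand-in for `CelanturDetection` with the same `box`, `score` and `type` fields the wrapper reads. We also assume each track exposes its current box as `tlbr` (x1, y1, x2, y2), plus `score` and `cls` attributes. Check these attribute names against the tracker version you use.

```python
from dataclasses import dataclass
from types import SimpleNamespace
from typing import Iterable, List, Tuple


@dataclass
class SimpleDetection:
    """Hypothetical stand-in for CelanturDetection (fields: box, score, type)."""
    box: Tuple[float, float, float, float]
    score: float
    type: int


def tracks_to_detections(tracks: Iterable) -> List[SimpleDetection]:
    """Turn tracker tracks into detections for the next pipeline step.

    Assumes each track has `tlbr` (x1, y1, x2, y2), `score` and `cls` attributes.
    """
    detections = []
    for track in tracks:
        x1, y1, x2, y2 = (float(v) for v in track.tlbr)
        detections.append(SimpleDetection((x1, y1, x2, y2), float(track.score), int(track.cls)))
    return detections


# Usage with a fake lost track standing in for tracker.lost_stracks:
fake_lost = [SimpleNamespace(tlbr=[120, 40, 180, 90], score=0.42, cls=2)]
print(tracks_to_detections(fake_lost))
```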

The video used in the example is a free-to-download stock video from vecteezy.com.

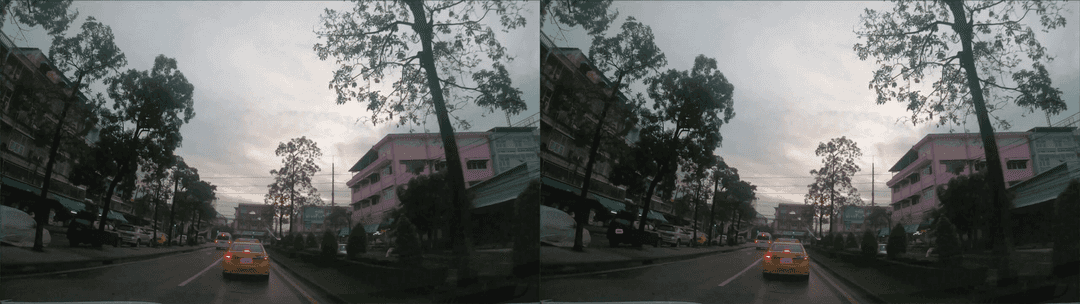

The results are already promising.

Note: the processing used for this example is not representative of the precision of Celantur software. We had to artificially lower the detection precision to get a good example of flickering/disappearing detections.

Indeed, upon closer inspection, the bounding box on the black car on the left persists across frames with tracking, whereas without tracking it disappears after the first frame. However, reviewing the entire video reveals several issues introduced by this approach. The most significant one arises when the object tracker fails to re-identify an object and creates a new track instead. This leads to fragmented and inaccurate tracking, undermining the effectiveness of the overall system.

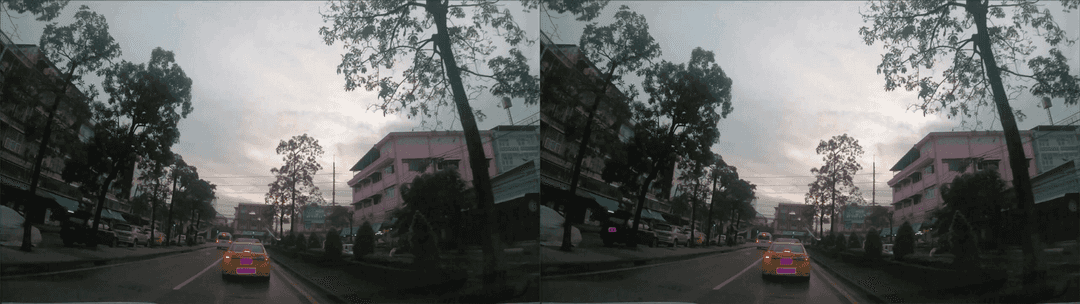

Analyzing these three frames, it's apparent that there are numerous persistent bounding boxes that are absent in the no-tracking scenario. These bounding boxes were initially detected but subsequently lost, yet they linger for approximately 30 frames (roughly one second) afterward. In some instances, such as the car on the left, these bounding boxes stem from correct detections. In other cases, like the car on the right, they are one-off false positives that persist for 30 frames. In essence, while flickering has been mitigated, a significant false-positive problem has been introduced.

Fine-tuning the results to get the best of both worlds

To enhance the accuracy of the results, we need to refine the algorithm responsible for determining whether to create a CelanturDetection from a lost track.

While maintaining the ability to track temporarily obscured objects for 30 frames, we aim to convert them into detections only if the following conditions are met:

- The track was lost at most 5 frames ago

- The track was detected at least 2 times

The first condition follows directly from the problem we are solving: short gaps of one or a few frames. If an object remains undetected for several consecutive frames, that signals a weakness of the object detection model itself, and the tracker should not be expected to cover for it. Replacing costly machine learning models with a cheaper tracking algorithm may sound appealing, but it isn't feasible.

The second condition is grounded in our business logic. Since we offer an anonymization service, we must balance false positives against false negatives. False negatives pose the greater risk, as they can inadvertently expose personal data. False positives, on the other hand, primarily affect the aesthetics of the anonymized video and are less critical. Consequently, we prioritize minimizing false negatives and accept some false positives. Precisely because we expect false positives, we should not carry them forward for an extended period: a track that was detected in a single frame only is a likely false positive, so we do not keep it alive once it is lost. To implement both conditions, we keep a small status record for each track:

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TrackStatus:
    tracked: bool = True  # By default, tracks are added when detected
    n_det: int = 0  # Number of frames in which this track was detected
    n_lost: int = 0  # Number of frames since the last detection

...

tracker_cache_update: Dict[int, TrackStatus] = {}  # Track status for each track ID
```

Then, on every BOTSORT update, we update these fields accordingly:

```python
tracker.update(detections, image.data)

lost_tracks = tracker.lost_stracks
tracked_tracks = tracker.tracked_stracks

# Tracks detected in this frame: count the detection and reset the lost counter
for track in tracked_tracks:
    track_id = track.track_id
    if track_id not in tracker_cache_update:
        track_status = TrackStatus()
        tracker_cache_update[track_id] = track_status
    tracker_cache_update[track_id].tracked = True
    tracker_cache_update[track_id].n_det += 1
    tracker_cache_update[track_id].n_lost = 0

# Lost tracks: count the frames since the last detection
for track in lost_tracks:
    track_id = track.track_id
    tracker_cache_update[track_id].tracked = False
    tracker_cache_update[track_id].n_lost += 1
```

Finally, based on these fields, we decide whether to initialize a detection from a lost track using the following logic:

```python
TOTAL_DETECTION_THRESHOLD = 2  # Minimum number of frames in which the track was detected
LAST_DETECTION_THRESHOLD = 5  # Maximum number of frames since the last detection

for track in tracked_tracks + lost_tracks:
    track_id = track.track_id
    track_status = tracker_cache_update[track_id]
    is_detected_now = track_status.tracked
    was_detected_often_enough = track_status.n_det >= TOTAL_DETECTION_THRESHOLD
    was_detected_recently = track_status.n_lost <= LAST_DETECTION_THRESHOLD
    should_initialize_detection = is_detected_now or (was_detected_often_enough and was_detected_recently)

    if should_initialize_detection:
        ...  # Initialize the detection here
```
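The bookkeeping and the decision rule can be exercised without a tracker. In the sketch below, made-up sets of tracked and lost IDs per frame stand in for `tracker.tracked_stracks` and `tracker.lost_stracks`. `update_cache` and `should_initialize_detection` are hypothetical helper names. One small defensive change is that `dict.setdefault` is used for lost IDs too, so a lost ID missing from the cache cannot raise a `KeyError`.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple

TOTAL_DETECTION_THRESHOLD = 2  # detected in at least this many frames
LAST_DETECTION_THRESHOLD = 5   # lost for at most this many frames


@dataclass
class TrackStatus:
    tracked: bool = True
    n_det: int = 0
    n_lost: int = 0


def update_cache(cache: Dict[int, TrackStatus],
                 tracked_ids: Iterable[int], lost_ids: Iterable[int]) -> None:
    """Per-frame bookkeeping, keyed by track ID."""
    for tid in tracked_ids:
        status = cache.setdefault(tid, TrackStatus())
        status.tracked = True
        status.n_det += 1
        status.n_lost = 0
    for tid in lost_ids:
        status = cache.setdefault(tid, TrackStatus())
        status.tracked = False
        status.n_lost += 1


def should_initialize_detection(status: TrackStatus) -> bool:
    was_detected_often_enough = status.n_det >= TOTAL_DETECTION_THRESHOLD
    was_detected_recently = status.n_lost <= LAST_DETECTION_THRESHOLD
    return status.tracked or (was_detected_often_enough and was_detected_recently)


# Made-up (tracked IDs, lost IDs) per frame: track 1 is seen in 3 frames,
# track 2 only once (a likely false positive); then both are lost for 6 frames.
timeline: List[Tuple[Set[int], Set[int]]] = (
    [({1, 2}, set()), ({1}, {2}), ({1}, {2})] + [(set(), {1, 2})] * 6
)
cache: Dict[int, TrackStatus] = {}
history = []
for tracked, lost in timeline:
    update_cache(cache, tracked, lost)
    history.append(sorted(t for t in tracked | lost if should_initialize_detection(cache[t])))
print(history)
```

Track 1 keeps producing a detection for its first 5 lost frames and stops in the sixth. The one-off track 2 is dropped as soon as it is lost.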

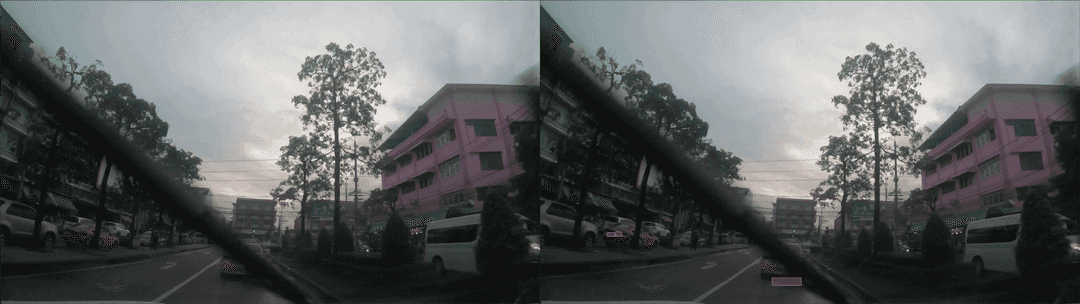

The results of the fine-tuned tracking algorithm are quite promising. The flickering is almost gone; false positives are still present, but they stay contained in the area where the object was initially detected.

For the end user, who doesn't see the detection boxes, the false positive on the left side of the image isn't particularly noticeable. When blurred, it simply appears as a slightly larger area being obscured than necessary. Further improvements are possible from here, but they require extensive fine-tuning, testing, and improvement of the machine learning model. The crucial consideration is to always balance false positives against false negatives and adapt the algorithm accordingly.

Unsolved issues

The primary issue we encountered in our implementation concerned scenarios where the object accelerates relative to the camera, i.e. its velocity in the image, measured in pixels per second, changes over time.

If you recall high school physics, you might recognize the equation governing such motion: $x(t) = x_0 + v_0 t + \frac{1}{2} a t^2$.

Our object tracking algorithm assumes constant velocity and predicts the object's next location as $x(t + \Delta t) = x(t) + v \, \Delta t$, where $v$ is the velocity of the object and $\Delta t$ is the time between two frames.

However, when the object accelerates, this prediction becomes inaccurate.
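A quick calculation shows how fast a constant-velocity guess drifts for a lost track. For illustration only, assume an object moving at $v_0 = 10$ px/frame with constant acceleration $a = 2$ px/frame², and assume the tracker knows $v_0$ exactly when the track is lost. After $k$ coasted frames, the kinematic equation above gives a shift of $v_0 k + \frac{1}{2} a k^2$. The constant-velocity model predicts only $v_0 k$, so the gap is $\frac{1}{2} a k^2$. This ignores the Kalman filter's measurement updates and uncertainty, so it simplifies what BOTSORT actually does.

```python
def coasting_error(a: float, v0: float, frames: int) -> float:
    """Distance (px) between the true position under constant acceleration and a
    constant-velocity extrapolation after `frames` frames without detections."""
    true_shift = v0 * frames + 0.5 * a * frames ** 2  # x(t) - x_0 with acceleration
    predicted_shift = v0 * frames                     # constant-velocity prediction
    return true_shift - predicted_shift


for k in (1, 5, 10, 30):
    print(f"{k:>2} frames lost: prediction off by {coasting_error(a=2.0, v0=10.0, frames=k):.0f} px")
```

With these numbers, the prediction is off by 1 px after one frame, 25 px after five frames and 900 px after 30 frames, the full lifetime of a lost track. By then the predicted box can no longer overlap the object.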

This discrepancy arises from the constant-velocity motion model of the linear Kalman filter used within the BOTSORT algorithm. To address it, we could use a motion model that accounts for acceleration, either a Kalman filter whose state also includes acceleration or a non-linear variant of the filter. However, a richer motion model comes with a higher computational cost, which might make it unsuitable for use within the BOTSORT algorithm. This approach has yet to be tested.
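Modelling acceleration does not by itself require a non-linear filter. A constant-acceleration model is still linear: it adds acceleration to the state, which enlarges the matrices the filter multiplies every frame. The sketch below compares the two transition models for an object moving at 10 px/frame and accelerating at 2 px/frame². It covers one coordinate and the predict step only, and its noise values are illustrative. It is not BOTSORT's filter, which tracks a multi-dimensional box state, and we have not tested this change in BOTSORT.

```python
import numpy as np


def transition_matrix(dt: float, with_acceleration: bool) -> np.ndarray:
    """Per-coordinate state transition: [x, v] or [x, v, a]."""
    if with_acceleration:
        return np.array([[1.0, dt, 0.5 * dt ** 2],
                         [0.0, 1.0, dt],
                         [0.0, 0.0, 1.0]])
    return np.array([[1.0, dt],
                     [0.0, 1.0]])


def kalman_predict(x: np.ndarray, P: np.ndarray, F: np.ndarray, Q: np.ndarray):
    """Linear Kalman filter predict step: x' = F x, P' = F P F^T + Q."""
    return F @ x, F @ P @ F.T + Q


def coast(x0: np.ndarray, F: np.ndarray, frames: int, q: float = 1e-2):
    """Run only predict steps, as for a lost track with no new detections."""
    x, P = x0, np.eye(len(x0))
    Q = q * np.eye(len(x0))  # illustrative process noise
    for _ in range(frames):
        x, P = kalman_predict(x, P, F, Q)
    return x, P


x_cv, _ = coast(np.array([0.0, 10.0]), transition_matrix(1.0, False), frames=5)
x_ca, _ = coast(np.array([0.0, 10.0, 2.0]), transition_matrix(1.0, True), frames=5)
print(x_cv[0], x_ca[0])  # predicted positions after 5 frames
```

After five frames, the constant-velocity model predicts 50 px and the constant-acceleration model predicts 75 px. The latter matches the kinematic equation above for $t = 5$. In practice, the acceleration itself would have to be estimated from noisy boxes.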

The problem is not very common, but it can appear in the following situations:

- Physical acceleration of the object in the real world: This occurs when an object actually speeds up, slows down, or changes direction. For instance, a car accelerates rapidly when the traffic light turns green, or makes a sharp turn.

- Acceleration of the object in image space: In this case, the object undergoes acceleration within the image itself. For example, when an object moves closer to the camera, it appears larger in the frame, causing the bounding box to shift more pixels with each frame. This situation often arises in dashcam videos capturing traffic moving in the opposite direction of the vehicle recording the video.
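The second case can happen even when the object moves at constant speed in the real world. Under an ideal pinhole camera model, a point at lateral offset $X$ and depth $Z$ appears at $x = fX/Z$ pixels from the image centre. As $Z$ shrinks at a constant rate, the per-frame shift in the image therefore grows. The focal length, offset, closing speed and frame rate below are illustrative assumptions, not values from our footage.

```python
def image_x(f_px: float, lateral_m: float, depth_m: float) -> float:
    """Ideal pinhole projection: horizontal image position (px from the centre)."""
    return f_px * lateral_m / depth_m


def per_frame_shifts(f_px: float = 1000.0, lateral_m: float = 2.0, depth0_m: float = 40.0,
                     closing_speed_mps: float = 20.0, fps: float = 30.0, frames: int = 5):
    """Image-space displacement per frame for an object approaching at constant speed."""
    xs = [image_x(f_px, lateral_m, depth0_m - closing_speed_mps * k / fps)
          for k in range(frames + 1)]
    return [b - a for a, b in zip(xs, xs[1:])]


shifts = per_frame_shifts()
print([round(s, 2) for s in shifts])
```

Each shift is slightly larger than the previous one. The growth looks small over five frames, but it steepens sharply as the depth approaches zero, which is exactly when an oncoming vehicle passes the dashcam.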

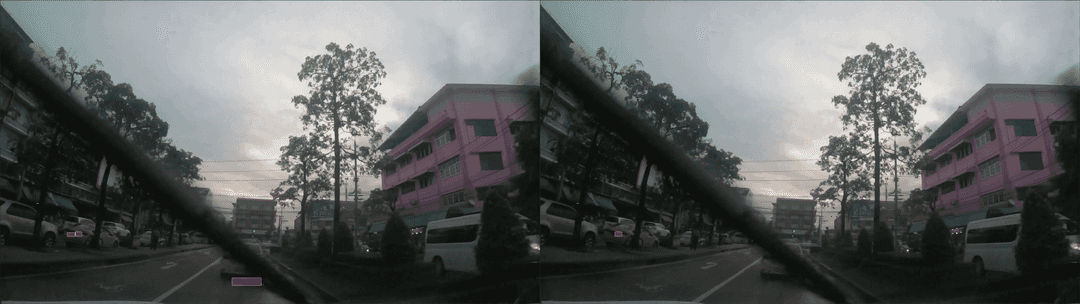

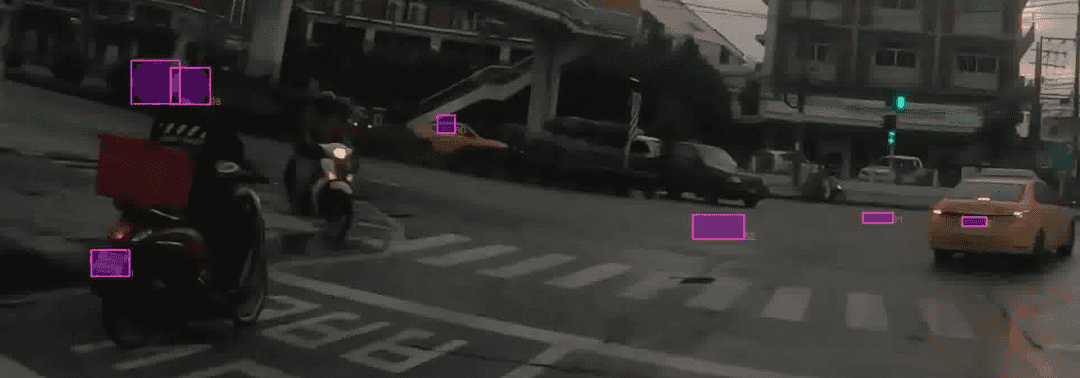

In each of these cases, if the acceleration is large enough, the tracker may lose track of the object, resulting in multiple false positives. For instance, in the images above, three bounding boxes appear behind the yellow car as it makes a right turn (the first scenario, where the object undergoes physical acceleration). Similarly, two bounding boxes are visible near the head of the motorcyclist (the second scenario, where the object remains stationary but moves relative to the dashcam car with acceleration).

Conclusion

Using object tracking based on BOTSORT, we are able to overcome false negatives of the same real-world object that "slip through" our detection model in some frames of a video. This enables us to provide superior anonymisation in videos while maintaining high performance.
