Edge AI Learnings: CPU Architectures, GPU Capabilities, and Challenges with Nvidia Jetson

We delve into the intricacies of CPU choices (ARM vs. x86), unlock the mysteries of GPU capabilities, and tackle the unique challenges of Jetson platforms.

19 August 2024

Introduction

Edge AI, with its promise of real-time processing and reduced latency, has become a cornerstone of modern technological advancements and will be the next evolutionary step for AI applications. However, developing robust Edge AI applications requires a deep understanding of the underlying hardware and software complexities. This blog post covers CPU architectures, GPU capabilities, and the unique challenges posed by Jetson platforms. It condenses the most fundamental lessons the Celantur R&D team learned in the early stages of its journey with Edge AI.

CPU Architectures: ARM vs. x86

The choice of CPU architecture significantly impacts application performance and power consumption. While x86 has long dominated the desktop and server markets, ARM processors have gained prominence in the embedded and mobile domains. Key considerations include:

- Instruction set architecture (ISA): Determines the native code instructions a CPU can execute.

- Vector instructions: The CPU's ability to apply a single operation to multiple data elements simultaneously (SIMD).

- Power efficiency: Crucial for battery-powered devices.

(Naive) Vector instruction example:

    for i in range(10):
        a[i] = b[i] * c[i]

A CPU with vector instructions (for example SSE/AVX on x86 or NEON on ARM) can compute several elements of the array a simultaneously. The source code looks the same on every architecture. The difference appears only during compilation, when the compiler translates this general code into the particular set of instructions supported by the target machine.
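As a rough illustration of why vector instructions matter, the following Python sketch counts how many multiply "instructions" the loop above would need with and without a vector unit. It is only a model: the function names and the `lanes` parameter are hypothetical, and Python itself still performs each multiplication one at a time.

```python
def scalar_multiply(b, c):
    """Multiply element by element: one multiply 'instruction' per element."""
    a, instructions = [], 0
    for x, y in zip(b, c):
        a.append(x * y)
        instructions += 1
    return a, instructions


def vector_multiply(b, c, lanes=4):
    """Multiply in chunks of `lanes` elements, as a SIMD unit would.

    Each chunk is counted as a single 'instruction'. This models the idea
    only; the interpreter does not emit real vector instructions.
    """
    a, instructions = [], 0
    for start in range(0, len(b), lanes):
        chunk_b = b[start:start + lanes]
        chunk_c = c[start:start + lanes]
        a.extend(x * y for x, y in zip(chunk_b, chunk_c))
        instructions += 1
    return a, instructions


b = list(range(10))
c = [2] * 10
scalar_result, scalar_count = scalar_multiply(b, c)
vector_result, vector_count = vector_multiply(b, c, lanes=4)
print(scalar_count, vector_count)  # 10 3
```

With 10 elements and an assumed width of 4 lanes, the modeled vector unit needs 3 instructions instead of 10, while producing the same result.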

Important things to notice:

- The environment (all the installed programs) differs between machines with different CPU architectures.

- A different architecture can be emulated (e.g. with QEMU). This lets you compile and run programs built for another architecture, but it is very slow.

- Docker supports emulation as an option for building containers for different CPU architectures.

- Cross-compilation (with toolchains) is possible. It lets you compile quickly on one architecture (commonly x86) for the target architecture. It is usually cumbersome to set up because of all the dependencies on the target architecture, and the compiled program still needs to be tested on the target architecture.

Everything explained above applies only to code that is executed on the CPU, that is, the “normal” code that you write.
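To make the architecture notes above more concrete, here is a minimal Python sketch that maps the host's CPU architecture to a Docker platform string and decides whether building for a given target would require emulation. The mapping table and the function names are assumptions made for illustration; the table covers only a few common values of `platform.machine()`.

```python
import platform

# Mapping from platform.machine() values to Docker platform strings.
# Only a few common machine names are listed; this is not exhaustive.
DOCKER_PLATFORMS = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",
    "aarch64": "linux/arm64",
    "arm64": "linux/arm64",
    "armv7l": "linux/arm/v7",
}


def docker_platform(machine):
    """Return the Docker platform string for a machine name, or None."""
    return DOCKER_PLATFORMS.get(machine.lower())


def needs_emulation(host_machine, target_platform):
    """True when host and target platforms differ.

    This is a simplification: it ignores cases where a CPU can natively
    run code built for a related architecture.
    """
    return docker_platform(host_machine) != target_platform


host = platform.machine()
print(host, docker_platform(host), needs_emulation(host, "linux/arm64"))
```

On an x86 development machine, a result of `True` for `linux/arm64` means that a command such as `docker buildx build --platform linux/arm64 .` would run the build under emulation, with the slowdown described above.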

Differences between Nvidia GPU Capabilities

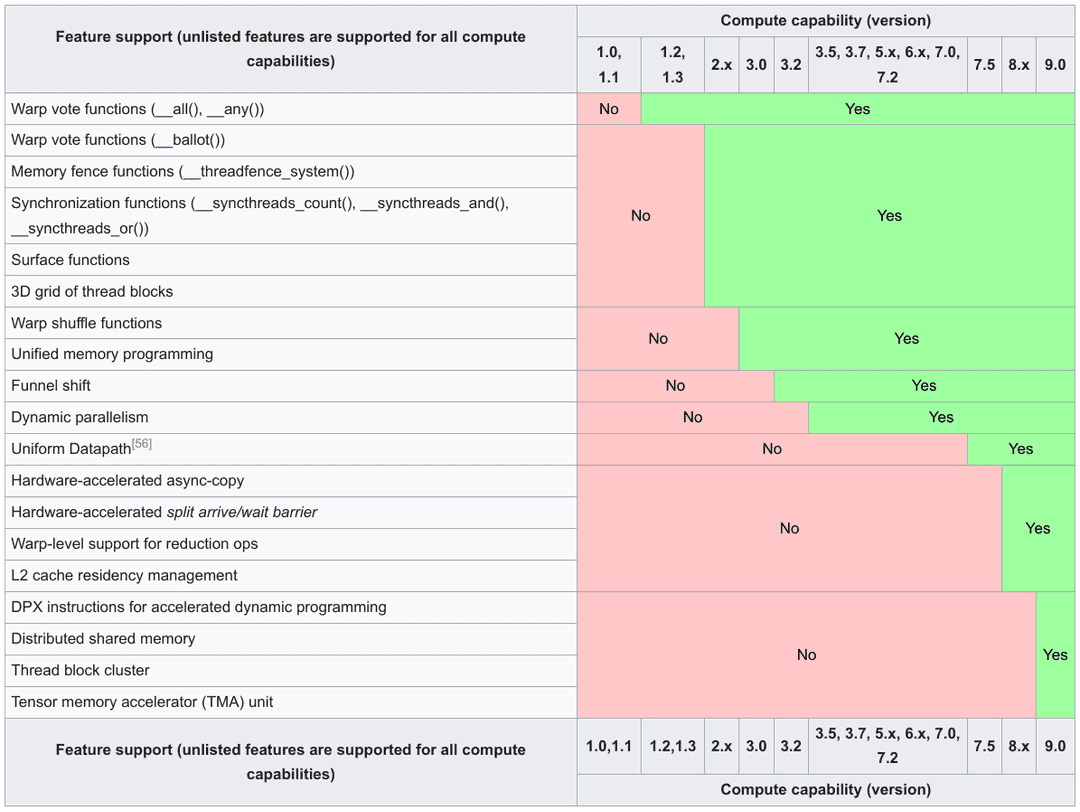

GPUs, initially designed for graphics rendering, have evolved into powerful computational engines. When writing a program that uses the GPU, the situation is a bit more dire. Instead of just a list of supported architectures, we have a continuous evolution of the features that a GPU is capable of. The set of available features, the compute capability, is denoted by a two-digit number such as 86 (compute capability 8.6). One example of differences in the CUDA API between compute capabilities:

There are many more differences between CUDA cores of different generations and their APIs. Different API calls are supported under different compute capabilities. This could potentially lead to the following nightmare in every line of code:

    cap = getCudaCapabilities()
    if (cap >= 50) {
        API_call_that_needs_50(...)
    } else {
        Another_API_call(...)
    }

However, CUDA makes our life easier in the following ways:

- You don’t need a GPU with compute capability X to compile a program for it. In this respect it is not as bad as CPU architectures.

- The Nvidia compiler (nvcc) has a flag for the target compute capability (--gpu-architecture, or -arch for short, e.g. -arch=sm_86) that lets you check whether your program compiles for the given compute capability.

- Normally, a new compute capability only introduces features and never drops them. So one only needs to ensure compilation for the lowest and highest capability versions to support everything between them.

- Normally, these features are hidden behind the API. One rarely needs to check for them manually.

- There are exceptions to the previous point, such as using Tensor Cores instead of CUDA cores.

Finally, a Jetson always has a specific compute capability that no discrete GPU has. This is because the Jetson has unified CPU/GPU memory: both share the same physical RAM. Hence, memory copies between CPU and GPU are very cheap and can often be avoided altogether.
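The "compile for the lowest and highest capability" rule from the list above can be sketched in a few lines of Python. The helper names and the list of supported capabilities are hypothetical; the generated strings follow nvcc's `-gencode arch=compute_XX,code=sm_XX` syntax, and the sketch assumes two-digit capabilities only.

```python
def split_capability(cap):
    """Split a two-digit capability such as 86 into (major, minor) = (8, 6)."""
    return divmod(cap, 10)


def gencode_flags(capabilities):
    """Build nvcc -gencode flags for the lowest and highest capability."""
    # A set removes the duplicate when only one capability is given.
    targets = sorted({min(capabilities), max(capabilities)})
    return [f"-gencode arch=compute_{cap},code=sm_{cap}" for cap in targets]


supported = [53, 61, 72, 75, 86]  # hypothetical list of GPUs to support
print(split_capability(86))
for flag in gencode_flags(supported):
    print(flag)
```

Passing the resulting flags to nvcc in a CI job is one way to check that the code still compiles at both ends of the supported range without owning every GPU in between. Whether a given CUDA toolkit still accepts the lowest capability depends on the toolkit version.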

Jetsons and Jetpack versions

Differences between Jetsons and “normal” PCs are:

- ARM{v7,v8} CPU architecture

- Custom GPU compute capability version

- Custom container environment

- Custom OS (a derivative of Ubuntu)

- No BIOS

- CLI overclock control

Hence, with all these differences, it is normally just easier to develop directly on the Jetson than to attempt cross-compilation.

Notice in particular the fifth point: a Jetson does not have a BIOS. This means that one cannot install the OS on it the “normal” way. One needs to flash the OS onto it.

Flashing is performed with the Nvidia JetPack SDK in the following steps:

- The operating system is flashed onto the hardware.

  - Normally, this is done over microUSB. The Jetson needs to be booted in recovery mode, which allows it to be recognized over USB as a normal storage device.

  - If the Jetson boots from an SD card (for example the Jetson Nano), the OS can be flashed directly onto the SD card with an SD card reader.

  - The flashed OS has a fixed Nvidia driver version.

- Frequently used dependencies are copied over and installed on the Jetson. This is normally done over SSH. The dependencies are chosen such that they are guaranteed to support the Nvidia and Ubuntu versions installed in the previous step. They include:

  - CUDA (Nvidia programming API)

  - OpenCV

  - DeepStream

  - TensorRT

  - Some others that are probably not important to us

It is important to note the following:

- The compatibility that is supposed to exist between the OS and dependency versions is not reliable. We encountered various problems, some of which even a fresh install did not fix, with:

  - Docker

  - OpenCV CUDA API

- It is often easier to just compile or install the required dependencies manually.

  - The APT repositories for Debian have an ARM version from which packages can be installed.

  - Compiling e.g. OpenCV or FFmpeg works more or less the same on all architectures.

- Overall, relying on Nvidia to provide good dependencies is a bad idea.
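Since the promised compatibility cannot be taken for granted, it helps to record exactly which OS release a Jetson is running before debugging dependency problems. The sketch below assumes that the release information is stored in `/etc/nv_tegra_release` and that its first line looks like the sample shown in the comment. Both the file format and the helper names are assumptions for illustration and should be checked against your own device.

```python
import re

# Assumed format of the first line of /etc/nv_tegra_release, e.g.
# "# R35 (release), REVISION: 4.1, GCID: ..., BOARD: ..., EABI: aarch64, ..."
RELEASE_PATTERN = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)")


def parse_l4t_release(text):
    """Return the release as 'major.revision', or None if not found."""
    match = RELEASE_PATTERN.search(text)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


def read_l4t_release(path="/etc/nv_tegra_release"):
    """Read the release file; return None if it is missing or unreadable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return parse_l4t_release(handle.readline())
    except OSError:
        return None


# Illustrative sample line, not taken from a real device.
sample = "# R35 (release), REVISION: 4.1, GCID: 0, BOARD: t186ref, EABI: aarch64"
print(parse_l4t_release(sample))
print(read_l4t_release())
```

On a machine that is not a Jetson, `read_l4t_release()` returns `None`, so the same script can run unchanged on a development PC.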

Celantur Edge - Image and Video Real-Time Anonymization on the Edge

Celantur Edge is a real-time image and video blurring SDK that helps you comply with data privacy regulations like GDPR and CCPA. By anonymizing data instantly, you can save time and money by avoiding post-processing bottlenecks. Our SDK is easy to integrate into your C++ or Python workflows and can run on various platforms.

Explore how it works, and let us know if you want to give it a try.
